chore(README): better readme, chart

40

README.md

@@ -1,43 +1,17 @@

|

|||||||

sibe

|

sibe

|

||||||

====

|

====

|

||||||

|

|

||||||

A simple Machine Learning library.

|

A simple Machine Learning library.

|

||||||

|

|

||||||

A simple neural network:

|

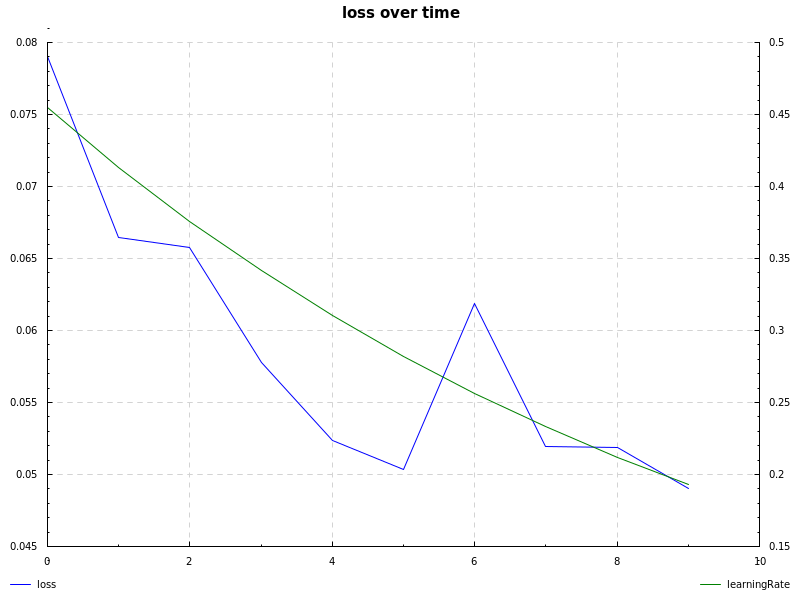

notMNIST dataset, cross-entropy loss, learning rate decay and sgd:

|

||||||

```haskell

|

|

||||||

module Main where

|

|

||||||

import Sibe

|

|

||||||

import Numeric.LinearAlgebra

|

|

||||||

import Data.List

|

|

||||||

|

|

||||||

main = do

|

See examples:

|

||||||

let learning_rate = 0.5

|

|

||||||

(iterations, epochs) = (2, 1000)

|

|

||||||

a = (logistic, logistic') -- activation function and the derivative

|

|

||||||

rnetwork = randomNetwork 0 2 [(8, a)] (1, a) -- two inputs, 8 nodes in a single hidden layer, 1 output

|

|

||||||

|

|

||||||

inputs = [vector [0, 1], vector [1, 0], vector [1, 1], vector [0, 0]] -- training dataset

|

|

||||||

labels = [vector [1], vector [1], vector [0], vector [0]] -- training labels

|

|

||||||

|

|

||||||

-- initial cost using crossEntropy method

|

|

||||||

initial_cost = zipWith crossEntropy (map (`forward` rnetwork) inputs) labels

|

|

||||||

|

|

||||||

-- train the network

|

|

||||||

network = session inputs rnetwork labels learning_rate (iterations, epochs)

|

|

||||||

|

|

||||||

-- run inputs through the trained network

|

|

||||||

-- note: here we are using the examples in the training dataset to test the network,

|

|

||||||

-- this is here just to demonstrate the way the library works, you should not do this

|

|

||||||

results = map (`forward` network) inputs

|

|

||||||

|

|

||||||

-- compute the new cost

|

|

||||||

cost = zipWith crossEntropy (map (`forward` network) inputs) labels

|

|

||||||

```

|

```

|

||||||

|

# neural network examples

|

||||||

See other examples:

|

|

||||||

```

|

|

||||||

# Simplest case of a neural network

|

|

||||||

stack exec example-xor

|

stack exec example-xor

|

||||||

|

stack exec example-424

|

||||||

|

# notMNIST dataset, achieves ~87% accuracy using exponential learning rate decay

|

||||||

|

stack exec example-notmnist

|

||||||

|

|

||||||

# Naive Bayes document classifier, using Reuters dataset

|

# Naive Bayes document classifier, using Reuters dataset

|

||||||

# using Porter stemming, stopword elimination and a few custom techniques.

|

# using Porter stemming, stopword elimination and a few custom techniques.

|

||||||

|

|||||||

@@ -42,7 +42,7 @@ module Main where
 
     let session = def { learningRate = 0.5
                       , batchSize = 32
-                      , epochs = 35
+                      , epochs = 24
                       , network = rnetwork
                       , training = zip trinputs trlabels
                       , test = zip teinputs telabels

BIN

notmnist.png

Binary file not shown.

Size before: 24 KiB. Size after: 28 KiB.
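
Note on "exponential learning rate decay", which the updated README mentions for the notMNIST example: the term usually means a schedule of the form eta_t = eta_0 * exp(-k * t), where t is the epoch index. The sketch below only illustrates that general idea. The helper `decayedRate` and the decay constant `k = 0.1` are hypothetical and are not taken from sibe, whose own schedule may differ. The initial rate 0.5 and the epoch count 24 are the values shown in the session config in this commit.

```haskell
module Main where

-- | Exponentially decayed learning rate: eta_t = eta_0 * exp (-k * t).
-- `decayedRate` is a hypothetical helper for illustration, not part of sibe.
decayedRate :: Double -> Double -> Int -> Double
decayedRate eta0 k epoch = eta0 * exp (negate k * fromIntegral epoch)

main :: IO ()
main = do
  let eta0    = 0.5        -- initial learning rate, as in the session config
      k       = 0.1        -- assumed decay constant, chosen only for illustration
      nEpochs = 24 :: Int  -- epoch count from the updated config
  -- Print the rate at every sixth epoch to show how it shrinks over training.
  mapM_
    (\e -> putStrLn ("epoch " ++ show e ++ ": " ++ show (decayedRate eta0 k e)))
    [0, 6 .. nEpochs]
```

With a schedule like this the step size falls fastest in the early epochs and then levels off.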
