sibe
====

A simple Machine Learning library.

## Simple neural network

```haskell
-- imports assumed: this library (Sibe), hmatrix for vector/toList,
-- and data-default-class for def
import Sibe
import Numeric.LinearAlgebra
import Data.Default.Class

main :: IO ()
main = do
  let a = (sigmoid, sigmoid') -- activation function
      -- random network, seed 0, values between -1 and 1,
      -- two inputs, two nodes in hidden layer and a single output
      rnetwork = randomNetwork 0 (-1, 1) 2 [(2, a)] (1, a)

      -- inputs and labels (XOR)
      inputs = [vector [0, 1], vector [1, 0], vector [1, 1], vector [0, 0]]
      labels = [vector [1], vector [1], vector [0], vector [0]]

      -- define the session which includes parameters
      session = def { network = rnetwork
                    , learningRate = 0.5
                    , epochs = 1000
                    , training = zip inputs labels
                    , test = zip inputs labels
                    } :: Session

      initialCost = crossEntropy session

  -- run gradient descent
  -- you can also use `sgd`, see the notmnist example
  newsession <- run gd session

  let results = map (`forward` newsession) inputs
      rounded = map (map round . toList) results

      cost = crossEntropy newsession

  putStrLn $ "- initial cost (cross-entropy): " ++ show initialCost
  putStrLn $ "- actual result: " ++ show results
  putStrLn $ "- rounded result: " ++ show rounded
  putStrLn $ "- cost (cross-entropy): " ++ show cost
```
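The `(sigmoid, sigmoid')` pair and `crossEntropy` above can be illustrated without the library. The following is a minimal sketch on plain lists of `Double`, assuming the usual logistic sigmoid and mean binary cross-entropy; sibe works on hmatrix vectors and its own definitions may differ. `denseLayer` and `meanCrossEntropy` are hypothetical helpers, not part of sibe's API.

```haskell
-- Sketch on plain lists; sibe's own definitions (on hmatrix vectors) may differ.

-- Logistic sigmoid and its derivative: the (activation, derivative) pair.
sigmoid :: Double -> Double
sigmoid x = 1 / (1 + exp (negate x))

sigmoid' :: Double -> Double
sigmoid' x = let s = sigmoid x in s * (1 - s)

-- Hypothetical helper: one dense layer, each output is f (row . input + bias).
denseLayer :: (Double -> Double) -> [[Double]] -> [Double] -> [Double] -> [Double]
denseLayer f weights biases input =
  zipWith (\row b -> f (sum (zipWith (*) row input) + b)) weights biases

-- Hypothetical helper: mean binary cross-entropy over (prediction, label) pairs.
-- Predictions are clamped to [eps, 1 - eps] so that log never receives 0.
meanCrossEntropy :: [(Double, Double)] -> Double
meanCrossEntropy pairs = negate (sum (map term pairs)) / fromIntegral (length pairs)
  where
    eps = 1e-12
    term (p, y) =
      let q = min (1 - eps) (max eps p)
      in y * log q + (1 - y) * log (1 - q)
```

Loaded in GHCi, `denseLayer sigmoid [[1, 1]] [-1] [0.5, 0.5]` evaluates to `[0.5]`, because the weighted sum plus bias is 0. A 2-2-1 network like the one above is two such layers applied in sequence.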

## Examples

```bash
# neural network examples
stack exec example-xor
stack exec example-424
# notMNIST dataset, achieves ~87.5% accuracy after 9 epochs (2 minutes)
stack exec example-notmnist

# Naive Bayes document classifier, using the Reuters dataset
# with Porter stemming, stopword elimination and a few custom techniques.
# The dataset is imbalanced, which biases the classifier towards some classes (earn, acq, ...).
# To work around the imbalance, the --top-ten option classifies only the 10 most popular
# classes, with evenly split datasets (100 for each class); this increases F-measure significantly
# and improves accuracy by ~10%.
# N-grams don't seem to help much here (or maybe my implementation is wrong!): using bigrams increases
# accuracy while slightly decreasing F-measure.
stack exec example-naivebayes-doc-classifier -- --verbose
stack exec example-naivebayes-doc-classifier -- --verbose --top-ten
```
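The naive Bayes notes above can be grounded with a small sketch: a multinomial naive Bayes classifier with add-one (Laplace) smoothing over already-tokenized documents, plus helpers for bigram features and the F-measure (harmonic mean of precision and recall). This is an illustration under assumptions, not the example's implementation: it does no Porter stemming, stopword elimination or the custom techniques mentioned, and `Model`, `train`, `classify`, `bigrams` and `fMeasure` are hypothetical names. It only needs the `containers` package that ships with GHC.

```haskell
import qualified Data.Map.Strict as M
import qualified Data.Set as S
import Data.List (maximumBy)
import Data.Ord (comparing)

type Class = String
type Doc = [String] -- a document as a list of (already preprocessed) tokens

-- Hypothetical model: documents per class, word counts per class, vocabulary size.
data Model = Model
  { docCounts  :: M.Map Class Int
  , wordCounts :: M.Map Class (M.Map String Int)
  , vocabSize  :: Int
  }

train :: [(Doc, Class)] -> Model
train examples = Model dcs wcs (S.size vocab)
  where
    dcs = M.fromListWith (+) [(c, 1) | (_, c) <- examples]
    wcs = M.fromListWith (M.unionWith (+))
            [(c, M.fromListWith (+) [(w, 1) | w <- doc]) | (doc, c) <- examples]
    vocab = S.fromList (concatMap fst examples)

-- log P(c) + sum of log P(w | c) over the tokens, with add-one smoothing.
logScore :: Model -> Doc -> Class -> Double
logScore m doc c = logPrior + sum (map logLikelihood doc)
  where
    totalDocs = fromIntegral (sum (M.elems (docCounts m)))
    logPrior = log (fromIntegral (M.findWithDefault 0 c (docCounts m)) / totalDocs)
    counts = M.findWithDefault M.empty c (wordCounts m)
    totalWords = fromIntegral (sum (M.elems counts))
    logLikelihood w =
      log ((fromIntegral (M.findWithDefault 0 w counts) + 1)
             / (totalWords + fromIntegral (vocabSize m)))

-- Pick the class with the highest log score.
classify :: Model -> Doc -> Class
classify m doc = maximumBy (comparing (logScore m doc)) (M.keys (docCounts m))

-- Adjacent token pairs, usable as extra features next to unigrams.
bigrams :: Doc -> Doc
bigrams ws = zipWith (\a b -> a ++ " " ++ b) ws (drop 1 ws)

-- F-measure (F1): harmonic mean of precision p and recall r.
fMeasure :: Double -> Double -> Double
fMeasure p r = if p + r == 0 then 0 else 2 * p * r / (p + r)
```

Scoring in log space avoids underflow when many small word probabilities are multiplied. To try bigram features, apply the same transformation on both sides, e.g. train and classify on `doc ++ bigrams doc` instead of `doc`.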

### notMNIST

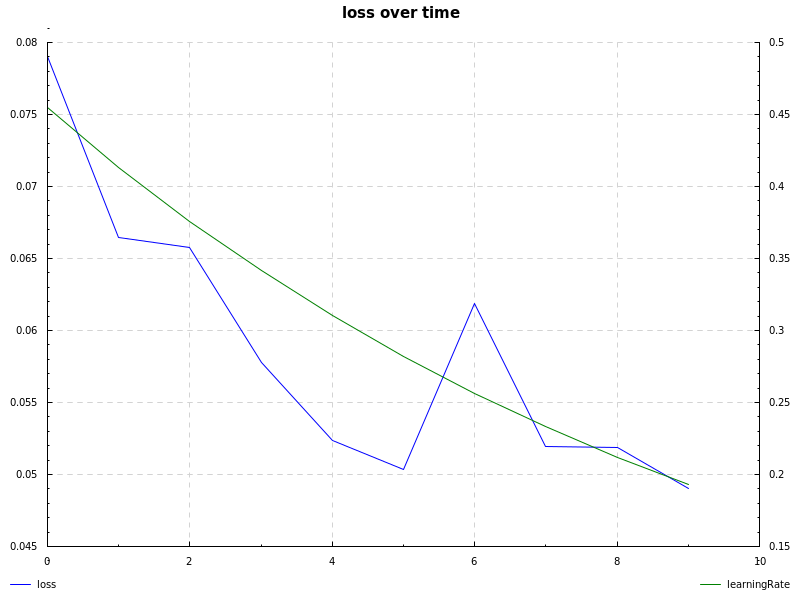

notMNIST dataset, sigmoid hidden layer, cross-entropy loss, learning rate decay and sgd ([`notmnist.hs`](https://github.com/mdibaiee/sibe/blob/master/examples/notmnist.hs)):
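The notMNIST runs use SGD with learning-rate decay. As a rough illustration only, the sketch below trains a single sigmoid unit with cross-entropy loss using per-example updates, decaying the rate once per epoch as `rate0 / (1 + decay * epoch)`. That schedule, the single-unit model and all names (`Params`, `predict`, `sgdStep`, `decayedRate`, `train`) are assumptions for illustration; `notmnist.hs` may use a different schedule and a full network.

```haskell
import Data.List (foldl')

-- Hypothetical parameters of a single sigmoid unit.
data Params = Params { weights :: [Double], bias :: Double } deriving Show

sigmoid :: Double -> Double
sigmoid x = 1 / (1 + exp (negate x))

predict :: Params -> [Double] -> Double
predict (Params ws b) xs = sigmoid (sum (zipWith (*) ws xs) + b)

-- Assumed decay schedule: rate0 / (1 + decay * epoch).
decayedRate :: Double -> Double -> Int -> Double
decayedRate rate0 decay epoch = rate0 / (1 + decay * fromIntegral epoch)

-- One update on a single example. With a sigmoid output and cross-entropy
-- loss, the gradient of the loss w.r.t. the pre-activation is (p - y).
-- Forcing err keeps the fold from building a long chain of thunks.
sgdStep :: Double -> Params -> ([Double], Double) -> Params
sgdStep rate ps@(Params ws b) (xs, y) =
  let err = predict ps xs - y
  in err `seq` Params (zipWith (\w x -> w - rate * err * x) ws xs) (b - rate * err)

-- `epochs` passes over the data, with the rate decayed once per epoch.
-- Examples are visited in a fixed order; real SGD usually shuffles them.
train :: Double -> Double -> Int -> Params -> [([Double], Double)] -> Params
train rate0 decay epochs p0 dataset = foldl' runEpoch p0 [0 .. epochs - 1]
  where
    runEpoch ps e = foldl' (sgdStep (decayedRate rate0 decay e)) ps dataset
```

Trained with `train 1.0 0.01 500 (Params [0, 0] 0)` on the four OR examples, the unit should predict above 0.5 exactly for the three positive inputs. OR is used here because a single unit cannot represent XOR; that is why the example above needs a hidden layer.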

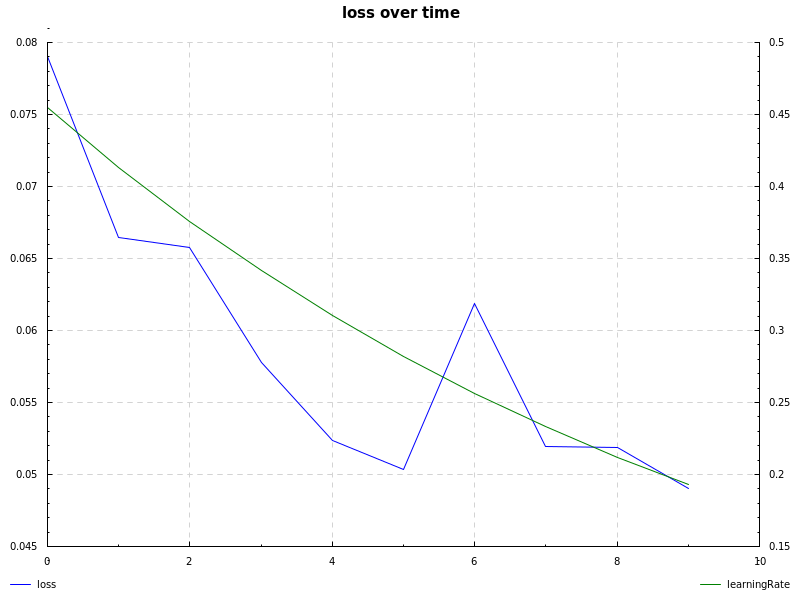

notMNIST dataset, relu hidden layer, cross-entropy loss, learning rate decay and sgd ([`notmnist.hs`](https://github.com/mdibaiee/sibe/blob/master/examples/notmnist.hs)):
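The second run swaps the sigmoid hidden layer for ReLU. A minimal sketch of ReLU and its derivative as an (activation, derivative) pair, in the same shape as the `(sigmoid, sigmoid')` tuple used in the neural network example; `relu`, `relu'` and `reluActivation` are hypothetical names here, and sibe may ship its own definitions.

```haskell
-- ReLU: passes positive inputs through, clamps negatives to 0.
relu :: Double -> Double
relu x = max 0 x

-- The derivative is undefined at 0; this sketch uses 0 there, a common convention.
relu' :: Double -> Double
relu' x = if x > 0 then 1 else 0

-- (activation, derivative) pair, mirroring (sigmoid, sigmoid').
reluActivation :: (Double -> Double, Double -> Double)
reluActivation = (relu, relu')
```

Unlike `sigmoid'`, which never exceeds 0.25, `relu'` is exactly 1 for positive inputs, so gradients through active units are not shrunk at every layer; units whose inputs stay negative receive zero gradient.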
