relu: run notmnist using relu activation and draw the chart

[wip] word2vec: work in progress implementation of word2vec

This commit is contained in:

@@ -60,5 +60,8 @@ stack exec example-naivebayes-doc-classifier -- --verbose --top-ten

|

|||||||

|

|

||||||

### notMNIST

|

### notMNIST

|

||||||

|

|

||||||

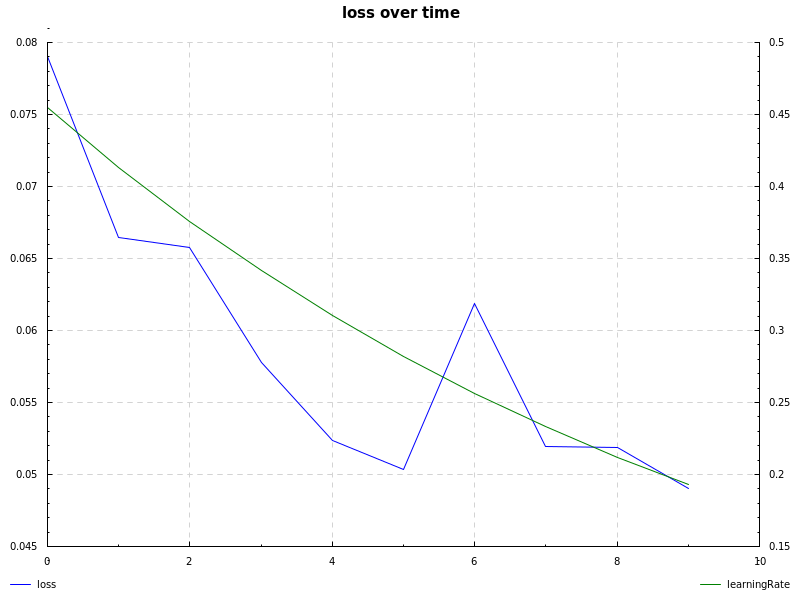

notMNIST dataset, cross-entropy loss, learning rate decay and sgd ([`notmnist.hs`](https://github.com/mdibaiee/sibe/blob/master/examples/notmnist.hs)):

|

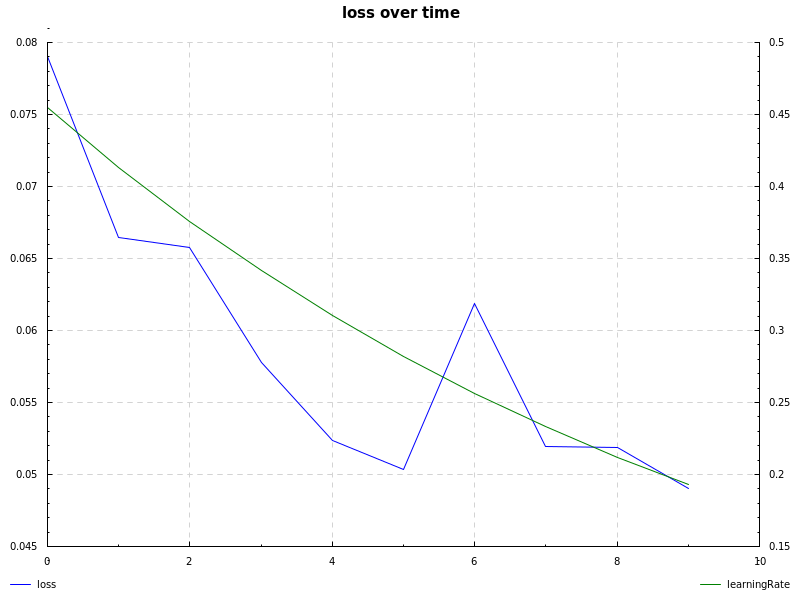

notMNIST dataset, sigmoid hidden layer, cross-entropy loss, learning rate decay and sgd ([`notmnist.hs`](https://github.com/mdibaiee/sibe/blob/master/examples/notmnist.hs)):

|

||||||

|

|

||||||

|

|

||||||

|

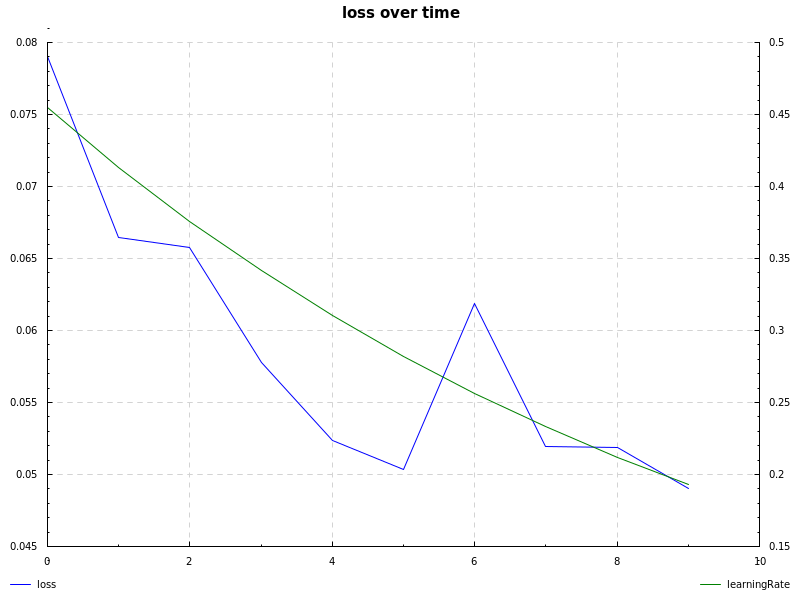

notMNIST dataset, relu hidden layer, cross-entropy loss, learning rate decay and sgd ([`notmnist.hs`](https://github.com/mdibaiee/sibe/blob/master/examples/notmnist.hs)):

|

||||||

|

|

||||||

|

|||||||

@@ -43,7 +43,7 @@ module Main where

|

|||||||

|

|

||||||

let session = def { learningRate = 0.5

|

let session = def { learningRate = 0.5

|

||||||

, batchSize = 32

|

, batchSize = 32

|

||||||

, epochs = 10

|

, epochs = 9

|

||||||

, network = rnetwork

|

, network = rnetwork

|

||||||

, training = zip trinputs trlabels

|

, training = zip trinputs trlabels

|

||||||

, test = zip teinputs telabels

|

, test = zip teinputs telabels

|

||||||

|

|||||||

34

examples/word2vec.hs

Normal file

34

examples/word2vec.hs

Normal file

@@ -0,0 +1,34 @@

|

|||||||

|

{-# LANGUAGE RecordWildCards #-}

|

||||||

|

{-# LANGUAGE FlexibleContexts #-}

|

||||||

|

{-# LANGUAGE ScopedTypeVariables #-}

|

||||||

|

|

||||||

|

module Main where

|

||||||

|

import Sibe

|

||||||

|

import Sibe.Word2Vec

|

||||||

|

import Sibe.Utils

|

||||||

|

import Data.Default.Class

|

||||||

|

import qualified Data.Vector.Storable as V

|

||||||

|

import Data.List (sortBy)

|

||||||

|

import Data.Function (on)

|

||||||

|

import Numeric.LinearAlgebra

|

||||||

|

import System.IO

|

||||||

|

import Data.List.Split

|

||||||

|

|

||||||

|

main = do

|

||||||

|

{-ds <- do

|

||||||

|

content <- readFile "examples/doc-classifier-data/data-reuters"

|

||||||

|

let splitted = splitOn (replicate 10 '-' ++ "\n") content

|

||||||

|

d = concatMap (tail . lines) (take 100 splitted)

|

||||||

|

return d-}

|

||||||

|

let ds = ["I like deep learning", "I like NLP", "I enjoy flying"]

|

||||||

|

|

||||||

|

let session = def { learningRate = 0.8

|

||||||

|

, batchSize = 10

|

||||||

|

, epochs = 1000

|

||||||

|

} :: Session

|

||||||

|

w2v = def { docs = ds }:: Word2Vec

|

||||||

|

|

||||||

|

|

||||||

|

r <- word2vec w2v session

|

||||||

|

{-print r-}

|

||||||

|

return ()

|

||||||

BIN

notmnist-relu.png

Normal file

BIN

notmnist-relu.png

Normal file

Binary file not shown.

|

After Width: | Height: | Size: 32 KiB |

BIN

notmnist.png

BIN

notmnist.png

Binary file not shown.

|

Before Width: | Height: | Size: 33 KiB After Width: | Height: | Size: 29 KiB |

BIN

sgd.png

BIN

sgd.png

Binary file not shown.

|

Before Width: | Height: | Size: 31 KiB After Width: | Height: | Size: 29 KiB |

14

sibe.cabal

14

sibe.cabal

@@ -15,7 +15,7 @@ cabal-version: >=1.10

|

|||||||

|

|

||||||

library

|

library

|

||||||

hs-source-dirs: src

|

hs-source-dirs: src

|

||||||

exposed-modules: Sibe, Sibe.NaiveBayes, Sibe.NLP

|

exposed-modules: Sibe, Sibe.NaiveBayes, Sibe.NLP, Sibe.Word2Vec, Sibe.Utils

|

||||||

build-depends: base >= 4.7 && < 5

|

build-depends: base >= 4.7 && < 5

|

||||||

, hmatrix

|

, hmatrix

|

||||||

, random

|

, random

|

||||||

@@ -52,6 +52,18 @@ executable example-xor

|

|||||||

, data-default-class

|

, data-default-class

|

||||||

default-language: Haskell2010

|

default-language: Haskell2010

|

||||||

|

|

||||||

|

executable example-word2vec

|

||||||

|

hs-source-dirs: examples

|

||||||

|

main-is: word2vec.hs

|

||||||

|

ghc-options: -threaded -rtsopts -with-rtsopts=-N

|

||||||

|

build-depends: base

|

||||||

|

, sibe

|

||||||

|

, hmatrix

|

||||||

|

, data-default-class

|

||||||

|

, split

|

||||||

|

, vector

|

||||||

|

default-language: Haskell2010

|

||||||

|

|

||||||

--executable example-sin

|

--executable example-sin

|

||||||

--hs-source-dirs: examples

|

--hs-source-dirs: examples

|

||||||

--main-is: sin.hs

|

--main-is: sin.hs

|

||||||

|

|||||||

72

src/Sibe.hs

72

src/Sibe.hs

@@ -10,8 +10,10 @@ module Sibe

|

|||||||

Output,

|

Output,

|

||||||

Activation,

|

Activation,

|

||||||

forward,

|

forward,

|

||||||

|

runLayer,

|

||||||

randomLayer,

|

randomLayer,

|

||||||

randomNetwork,

|

randomNetwork,

|

||||||

|

buildNetwork,

|

||||||

saveNetwork,

|

saveNetwork,

|

||||||

loadNetwork,

|

loadNetwork,

|

||||||

train,

|

train,

|

||||||

@@ -30,7 +32,9 @@ module Sibe

|

|||||||

replaceVector,

|

replaceVector,

|

||||||

Session(..),

|

Session(..),

|

||||||

accuracy,

|

accuracy,

|

||||||

learningRateDecay

|

learningRateDecay,

|

||||||

|

ignoreBiases,

|

||||||

|

one

|

||||||

) where

|

) where

|

||||||

import Numeric.LinearAlgebra

|

import Numeric.LinearAlgebra

|

||||||

import System.Random

|

import System.Random

|

||||||

@@ -62,7 +66,12 @@ module Sibe

|

|||||||

|

|

||||||

data Network = O Layer

|

data Network = O Layer

|

||||||

| Layer :- Network

|

| Layer :- Network

|

||||||

deriving (Show)

|

|

||||||

|

instance Show Network where

|

||||||

|

show (Layer biases nodes _ :- n) =

|

||||||

|

(show . length $ toLists nodes) ++ "x" ++ (show . length . head . toLists $ nodes) ++ " " ++ (show . length . toList $ biases) ++ " :- " ++ show n

|

||||||

|

show (O (Layer biases nodes _)) =

|

||||||

|

(show . length $ toLists nodes) ++ "x" ++ (show . length . head . toLists $ nodes) ++ " " ++ (show . length . toList $ biases)

|

||||||

|

|

||||||

infixr 5 :-

|

infixr 5 :-

|

||||||

|

|

||||||

@@ -75,7 +84,7 @@ module Sibe

|

|||||||

, batchSize :: Int

|

, batchSize :: Int

|

||||||

, chart :: [(Int, Double, Double)]

|

, chart :: [(Int, Double, Double)]

|

||||||

, momentum :: Double

|

, momentum :: Double

|

||||||

}

|

} deriving (Show)

|

||||||

|

|

||||||

emptyNetwork = randomNetwork 0 (0, 0) 0 [] (0, (id, id))

|

emptyNetwork = randomNetwork 0 (0, 0) 0 [] (0, (id, id))

|

||||||

instance Default Session where

|

instance Default Session where

|

||||||

@@ -132,6 +141,13 @@ module Sibe

|

|||||||

randomLayer seed (input, h) bound a :-

|

randomLayer seed (input, h) bound a :-

|

||||||

randomNetwork (seed + 1) bound h hs output

|

randomNetwork (seed + 1) bound h hs output

|

||||||

|

|

||||||

|

buildNetwork :: Seed -> (Double, Double) -> Int -> [(Int, Int, Activation)] -> (Int, Int, Activation) -> Network

|

||||||

|

buildNetwork seed bound input [] (outputRows, outputColumns, a) =

|

||||||

|

O $ randomLayer seed (input, outputColumns) bound a

|

||||||

|

buildNetwork seed bound input ((rows, columns, a):hs) output =

|

||||||

|

randomLayer seed (input, columns) bound a :-

|

||||||

|

buildNetwork (seed + 1) bound columns hs output

|

||||||

|

|

||||||

sigmoid :: Vector Double -> Vector Double

|

sigmoid :: Vector Double -> Vector Double

|

||||||

sigmoid x = 1 / max (1 + exp (-x)) 1e-10

|

sigmoid x = 1 / max (1 + exp (-x)) 1e-10

|

||||||

|

|

||||||

@@ -172,6 +188,9 @@ module Sibe

|

|||||||

crossEntropy' :: Vector Double -> Vector Double

|

crossEntropy' :: Vector Double -> Vector Double

|

||||||

crossEntropy' x = 1 / fromIntegral (V.length x)

|

crossEntropy' x = 1 / fromIntegral (V.length x)

|

||||||

|

|

||||||

|

one :: Vector Double -> Vector Double

|

||||||

|

one v = vector $ replicate (V.length v) 1

|

||||||

|

|

||||||

train :: Input

|

train :: Input

|

||||||

-> Network

|

-> Network

|

||||||

-> Output -- target

|

-> Output -- target

|

||||||

@@ -210,46 +229,6 @@ module Sibe

|

|||||||

-- pass = weights #> de

|

-- pass = weights #> de

|

||||||

in (layer :- n', pass)

|

in (layer :- n', pass)

|

||||||

|

|

||||||

{-trainMomentum :: Input

|

|

||||||

-> Network

|

|

||||||

-> Output -- target

|

|

||||||

-> Double -- learning rate

|

|

||||||

-> (Double, Double) -- momentum

|

|

||||||

-> Network -- network's output

|

|

||||||

trainMomentum input network target alpha (m, v) = fst $ run input network

|

|

||||||

where

|

|

||||||

run :: Input -> Network -> (Network, Vector Double)

|

|

||||||

run input (O l@(Layer biases weights (fn, fn'))) =

|

|

||||||

let y = runLayer input l

|

|

||||||

o = fn y

|

|

||||||

delta = o - target

|

|

||||||

de = delta * fn' y

|

|

||||||

v =

|

|

||||||

-- de = delta -- cross entropy cost

|

|

||||||

|

|

||||||

biases' = biases - scale alpha de

|

|

||||||

weights' = weights - scale alpha (input `outer` de) -- small inputs learn slowly

|

|

||||||

layer = Layer biases' weights' (fn, fn') -- updated layer

|

|

||||||

|

|

||||||

pass = weights #> de

|

|

||||||

-- pass = weights #> de

|

|

||||||

|

|

||||||

in (O layer, pass)

|

|

||||||

run input (l@(Layer biases weights (fn, fn')) :- n) =

|

|

||||||

let y = runLayer input l

|

|

||||||

o = fn y

|

|

||||||

(n', delta) = run o n

|

|

||||||

|

|

||||||

de = delta * fn' y

|

|

||||||

|

|

||||||

biases' = biases - cmap (*alpha) de

|

|

||||||

weights' = weights - cmap (*alpha) (input `outer` de)

|

|

||||||

layer = Layer biases' weights' (fn, fn')

|

|

||||||

|

|

||||||

pass = weights #> de

|

|

||||||

-- pass = weights #> de

|

|

||||||

in (layer :- n', pass)-}

|

|

||||||

|

|

||||||

gd :: Session -> IO Session

|

gd :: Session -> IO Session

|

||||||

gd session = do

|

gd session = do

|

||||||

seed <- newStdGen

|

seed <- newStdGen

|

||||||

@@ -324,6 +303,13 @@ module Sibe

|

|||||||

learningRateDecay (step, m) session =

|

learningRateDecay (step, m) session =

|

||||||

session { learningRate = max m $ learningRate session / step }

|

session { learningRate = max m $ learningRate session / step }

|

||||||

|

|

||||||

|

ignoreBiases :: Session -> Session

|

||||||

|

ignoreBiases session =

|

||||||

|

session { network = rmbias (network session) }

|

||||||

|

where

|

||||||

|

rmbias (O (Layer nodes biases a)) = O $ Layer nodes (biases * 0) a

|

||||||

|

rmbias ((Layer nodes biases a) :- n) = Layer nodes (biases * 0) a :- rmbias n

|

||||||

|

|

||||||

run :: (Session -> IO Session)

|

run :: (Session -> IO Session)

|

||||||

-> Session -> IO Session

|

-> Session -> IO Session

|

||||||

run fn session = foldM (\s i -> fn s) session [0..epochs session]

|

run fn session = foldM (\s i -> fn s) session [0..epochs session]

|

||||||

|

|||||||

@@ -1,7 +1,6 @@

|

|||||||

module Sibe.NLP

|

module Sibe.NLP

|

||||||

(Class,

|

(Class,

|

||||||

Document(..),

|

Document(..),

|

||||||

ordNub,

|

|

||||||

accuracy,

|

accuracy,

|

||||||

recall,

|

recall,

|

||||||

precision,

|

precision,

|

||||||

@@ -14,15 +13,16 @@ module Sibe.NLP

|

|||||||

ngramText,

|

ngramText,

|

||||||

)

|

)

|

||||||

where

|

where

|

||||||

|

import Sibe.Utils

|

||||||

import Data.List

|

import Data.List

|

||||||

import Debug.Trace

|

import Debug.Trace

|

||||||

import qualified Data.Set as Set

|

|

||||||

import Data.List.Split

|

import Data.List.Split

|

||||||

import Data.Maybe

|

import Data.Maybe

|

||||||

import Control.Arrow ((&&&))

|

import Control.Arrow ((&&&))

|

||||||

import Text.Regex.PCRE

|

import Text.Regex.PCRE

|

||||||

import Data.Char (isSpace, isNumber, toLower)

|

import Data.Char (isSpace, isNumber, toLower)

|

||||||

import NLP.Stemmer

|

import NLP.Stemmer

|

||||||

|

import qualified Data.Set as Set

|

||||||

|

|

||||||

type Class = Int;

|

type Class = Int;

|

||||||

|

|

||||||

@@ -73,13 +73,6 @@ module Sibe.NLP

|

|||||||

|

|

||||||

concatDocs = concatMap (\(Document text _) -> text ++ " ")

|

concatDocs = concatMap (\(Document text _) -> text ++ " ")

|

||||||

|

|

||||||

ordNub :: (Ord a) => [a] -> [a]

|

|

||||||

ordNub = go Set.empty

|

|

||||||

where

|

|

||||||

go _ [] = []

|

|

||||||

go s (x:xs) = if x `Set.member` s then go s xs

|

|

||||||

else x : go (Set.insert x s) xs

|

|

||||||

|

|

||||||

accuracy :: [(Int, (Int, Double))] -> Double

|

accuracy :: [(Int, (Int, Double))] -> Double

|

||||||

accuracy results =

|

accuracy results =

|

||||||

let pairs = map (\(a, b) -> (a, fst b)) results

|

let pairs = map (\(a, b) -> (a, fst b)) results

|

||||||

|

|||||||

@@ -4,7 +4,6 @@ module Sibe.NaiveBayes

|

|||||||

initialize,

|

initialize,

|

||||||

run,

|

run,

|

||||||

session,

|

session,

|

||||||

ordNub,

|

|

||||||

accuracy,

|

accuracy,

|

||||||

precision,

|

precision,

|

||||||

recall,

|

recall,

|

||||||

@@ -19,6 +18,7 @@ module Sibe.NaiveBayes

|

|||||||

removeStopwords,

|

removeStopwords,

|

||||||

)

|

)

|

||||||

where

|

where

|

||||||

|

import Sibe.Utils

|

||||||

import Sibe.NLP

|

import Sibe.NLP

|

||||||

import Data.List

|

import Data.List

|

||||||

import Debug.Trace

|

import Debug.Trace

|

||||||

|

|||||||

24

src/Sibe/Utils.hs

Normal file

24

src/Sibe/Utils.hs

Normal file

@@ -0,0 +1,24 @@

|

|||||||

|

module Sibe.Utils

|

||||||

|

(similarity,

|

||||||

|

ordNub,

|

||||||

|

onehot

|

||||||

|

) where

|

||||||

|

import qualified Data.Vector.Storable as V

|

||||||

|

import qualified Data.Set as Set

|

||||||

|

import Numeric.LinearAlgebra

|

||||||

|

|

||||||

|

similarity :: Vector Double -> Vector Double -> Double

|

||||||

|

similarity a b = (V.sum $ a * b) / (magnitude a * magnitude b)

|

||||||

|

where

|

||||||

|

magnitude :: Vector Double -> Double

|

||||||

|

magnitude v = sqrt $ V.sum (cmap (^2) v)

|

||||||

|

|

||||||

|

onehot :: Int -> Int -> Vector Double

|

||||||

|

onehot len i = vector $ replicate i 0 ++ [1] ++ replicate (len - i - 1) 0

|

||||||

|

|

||||||

|

ordNub :: (Ord a) => [a] -> [a]

|

||||||

|

ordNub = go Set.empty

|

||||||

|

where

|

||||||

|

go _ [] = []

|

||||||

|

go s (x:xs) = if x `Set.member` s then go s xs

|

||||||

|

else x : go (Set.insert x s) xs

|

||||||

103

src/Sibe/Word2Vec.hs

Normal file

103

src/Sibe/Word2Vec.hs

Normal file

@@ -0,0 +1,103 @@

|

|||||||

|

module Sibe.Word2Vec

|

||||||

|

(word2vec,

|

||||||

|

Word2Vec (..)

|

||||||

|

) where

|

||||||

|

import Sibe

|

||||||

|

import Sibe.NLP

|

||||||

|

import Sibe.Utils

|

||||||

|

import Debug.Trace

|

||||||

|

import Data.Char

|

||||||

|

import Data.Maybe

|

||||||

|

import Data.List

|

||||||

|

import Numeric.LinearAlgebra hiding (find)

|

||||||

|

import qualified Data.Vector.Storable as V

|

||||||

|

import Data.Default.Class

|

||||||

|

import Data.Function (on)

|

||||||

|

|

||||||

|

data Word2Vec = Word2Vec { docs :: [String]

|

||||||

|

, window :: Int

|

||||||

|

}

|

||||||

|

instance Default Word2Vec where

|

||||||

|

def = Word2Vec { docs = []

|

||||||

|

, window = 2

|

||||||

|

}

|

||||||

|

|

||||||

|

word2vec w2v session = do

|

||||||

|

return trainingData

|

||||||

|

let s = session { training = trainingData

|

||||||

|

, network = buildNetwork 0 (-1, 1) v [(v, 25, (id, one))] (20, v, (softmax, crossEntropy'))

|

||||||

|

}

|

||||||

|

print trainingData

|

||||||

|

newses <- run (gd . learningRateDecay (1.1, 0.1) . ignoreBiases) s

|

||||||

|

|

||||||

|

let (hidden@(Layer biases nodes _) :- _) = network newses

|

||||||

|

{-let computedVocVec = map (\(w, v) -> (w, forward v newses)) vocvec-}

|

||||||

|

let computedVocVec = map (\(w, v) -> (w, (fromRows [v]) <> nodes)) vocvec

|

||||||

|

print computedVocVec

|

||||||

|

|

||||||

|

{-mapM_ (\(w, v) -> do

|

||||||

|

putStr $ w ++ ": "

|

||||||

|

let similarities = map (similarity v . snd) computedVocVec

|

||||||

|

let sorted = sortBy (compare `on` similarity v . snd) computedVocVec

|

||||||

|

{-print $ zip (map fst sorted) similarities-}

|

||||||

|

print . take 2 . drop 1 . reverse $ map fst sorted

|

||||||

|

) computedVocVec-}

|

||||||

|

|

||||||

|

return newses

|

||||||

|

where

|

||||||

|

ws = words (concatMap ((++ " <start> ") . map toLower) (docs w2v))

|

||||||

|

vocabulary = ordNub ws

|

||||||

|

v = length vocabulary

|

||||||

|

|

||||||

|

cooccurence = foldl' iter [] (zip [0..] ws)

|

||||||

|

where

|

||||||

|

iter acc (i, w) =

|

||||||

|

let a = findIndex ((== w) . fst) acc

|

||||||

|

before = take (window w2v) . drop (i - window w2v) $ ws

|

||||||

|

after = take (window w2v) . drop (i + 1) $ ws

|

||||||

|

ns = if i == 0 then after else before ++ after

|

||||||

|

in

|

||||||

|

if isJust a then

|

||||||

|

let idx = fromJust a

|

||||||

|

new = foldl (\acc n -> add acc n) (snd $ acc !! idx) ns

|

||||||

|

in take idx acc ++ [(w, new)] ++ drop (idx + 1) acc

|

||||||

|

else

|

||||||

|

acc ++ [(w, map (\n -> (n, 1)) ns)]

|

||||||

|

|

||||||

|

add [] n = [(n, 1)]

|

||||||

|

add ((hw, hc):hs) n

|

||||||

|

| n == hw = (hw, hc + 1):hs

|

||||||

|

| otherwise = (hw, hc):add hs n

|

||||||

|

|

||||||

|

vocvec = zip vocabulary $ map (onehot v) [0..v - 1]

|

||||||

|

{-trainingData = map iter cooccurence

|

||||||

|

where

|

||||||

|

iter (w, targets) =

|

||||||

|

let ts = map (\(w, c) -> c * (snd . fromJust $ find ((== w) . fst) vocvec)) targets

|

||||||

|

folded = foldl (+) (vector $ replicate v 0) ts

|

||||||

|

input = snd . fromJust $ find ((== w) . fst) vocvec

|

||||||

|

in (input, folded)-}

|

||||||

|

trainingData = map iter $ zip [window w2v..length vocvec - window w2v] vocvec

|

||||||

|

where

|

||||||

|

iter (i, (w, v)) =

|

||||||

|

let before = take (window w2v) . drop (i - window w2v) $ vocvec

|

||||||

|

after = take (window w2v) . drop (i + 1) $ vocvec

|

||||||

|

ns = map snd $ before ++ after

|

||||||

|

new = foldl1 (+) ns

|

||||||

|

in (v, new)

|

||||||

|

|

||||||

|

add [] n = [(n, 1)]

|

||||||

|

add ((hw, hc):hs) n

|

||||||

|

| n == hw = (hw, hc + 1):hs

|

||||||

|

| otherwise = (hw, hc):add hs n

|

||||||

|

|

||||||

|

wordfrequency = foldl' iter [] ws

|

||||||

|

where

|

||||||

|

iter acc w =

|

||||||

|

let i = findIndex ((== w) . fst) acc

|

||||||

|

in

|

||||||

|

if isJust i then

|

||||||

|

let idx = fromJust i

|

||||||

|

in take idx acc ++ [(w, snd (acc !! idx) + 1)] ++ drop (idx + 1) acc

|

||||||

|

else

|

||||||

|

acc ++ [(w, 1)]

|

||||||

44

src/Sibe/Word2Vec.hs.backup

Normal file

44

src/Sibe/Word2Vec.hs.backup

Normal file

@@ -0,0 +1,44 @@

|

|||||||

|

module Sibe.Word2Vec

|

||||||

|

(word2vec,

|

||||||

|

mapTuple

|

||||||

|

) where

|

||||||

|

import Sibe

|

||||||

|

import Sibe.NLP

|

||||||

|

import Debug.Trace

|

||||||

|

import Data.Char

|

||||||

|

import Data.Maybe

|

||||||

|

import Data.List

|

||||||

|

import Numeric.LinearAlgebra hiding (find)

|

||||||

|

import qualified Data.Vector.Storable as V

|

||||||

|

|

||||||

|

word2vec docs session = do

|

||||||

|

let cooccurence = concat $ map co docs

|

||||||

|

a = (sigmoid, sigmoid')

|

||||||

|

o = (softmax, crossEntropy')

|

||||||

|

window = 2

|

||||||

|

s = session { training = cooccurence

|

||||||

|

, test = cooccurence

|

||||||

|

, network = buildNetwork 0 (-1, 1) n [(n, 300, (id, id))] (300, n, (softmax, crossEntropy'))

|

||||||

|

}

|

||||||

|

print $ network s

|

||||||

|

newses <- run gd s

|

||||||

|

return (newses, cooccurence, vocabulary, vocvec)

|

||||||

|

where

|

||||||

|

n = length vocabulary

|

||||||

|

vocabulary = ordNub . words . map toLower . concatMap (++ " ") $ docs

|

||||||

|

vocvec = zip vocabulary $ map tovec [0..n]

|

||||||

|

tovec i = replicate i 0 ++ [1] ++ replicate (n - i - 1) 0

|

||||||

|

co d =

|

||||||

|

let p = pairs d

|

||||||

|

in map (\(a, [b, c]) -> (f a, V.concat [f b, f c])) p

|

||||||

|

where

|

||||||

|

f w = vector . snd . fromJust $ find ((== w) . fst) vocvec

|

||||||

|

pairs d = concatMap iter [0..length ws]

|

||||||

|

where

|

||||||

|

ws = words $ map toLower d

|

||||||

|

iter i

|

||||||

|

| i > 0 && i < length ws - 1 = [(ws !! i, [ws !! (i - 1), ws !! (i + 1)])]

|

||||||

|

| otherwise = []

|

||||||

|

|

||||||

|

mapTuple :: (a -> b) -> (a, a) -> (b, b)

|

||||||

|

mapTuple f (a, b) = (f a, f b)

|

||||||

Reference in New Issue

Block a user